Revision as of 8 January 2019, 12:00, by Js146669


Oracle OVM

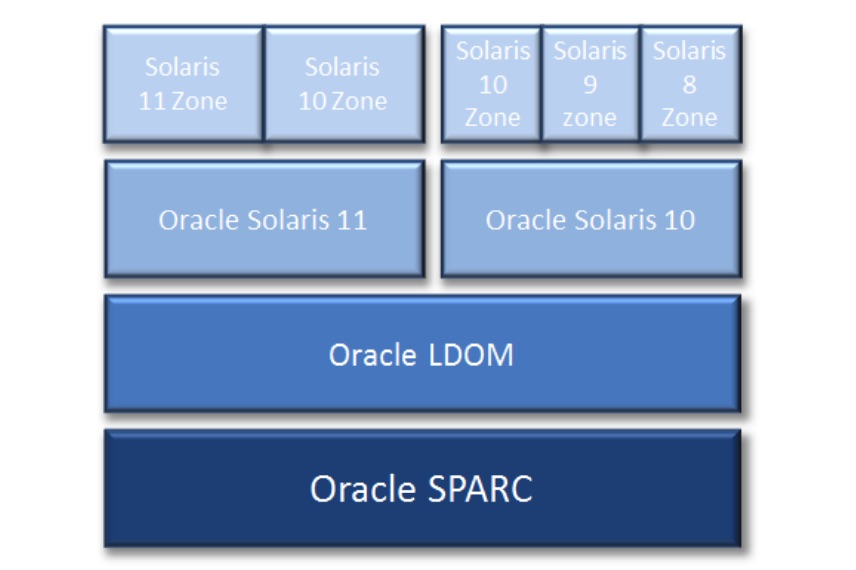

Several types of Solaris virtualisation are covered by the generic name OVM (Oracle Virtual Machine):

- Hypervisor: Oracle LDOMs

- OS: Solaris Zones/Containers, either Whole-root, Sparse-root or Kernel zones

General Information

OVM is included in Solaris 10 and Solaris 11. It does not require an RTU (Right-to-Use) licence, although a support contract is required for each running guest domain. The advantages of OVM are:

- provision bare metal hypervisors

- provision virtual machines

- Hard partitioning, to fit Oracle's licence structure for multi-core and multi-threaded environments. To avoid paying for all the cores in the platform, hard partitioning is configured so that only the cores used in the virtual instance are licensed.

- This is enforced by using CPU whole-core configurations.

- A CPU whole-core configuration has domains that are allocated CPU whole cores instead of individual CPU threads.

- When binding a domain in a whole-core configuration, the system provisions the specified number of CPU cores and all of their CPU threads to the domain.

- Using a CPU whole-core configuration limits the number of CPU cores that can be dynamically assigned to a bound or active domain.

NB! By default, a domain is configured to use CPU threads.

- Migrate virtual machines to different hosts using either "Warm" or "Cold" migration.

- Guest domains can run Solaris 11 or Solaris 10. Zones can then be configured in the guest domain.

NB! This does, however, limit that guest domain to cold migration only.

- ZFS on the host domain makes patching and backup/recovery policies easy to implement.

- NFS volumes, or SAN/iSCSI disks with a cluster file system, to facilitate "Warm" migration.
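The whole-core (hard partitioning) configuration described above might be scripted roughly as follows. This is a minimal sketch, assuming the Oracle VM Server for SPARC `ldm` subcommands `stop`, `unbind`, `set-core`, `bind` and `start` (check ldm(1M) for your release). The function name `set_whole_cores` and the `LDM` override variable are hypothetical, and stopping/unbinding the domain first is a conservative choice rather than a documented requirement.

```sh
#!/bin/sh
# Sketch only: assumes Oracle VM Server for SPARC `ldm`.
# LDM is a hypothetical override; set LDM=echo for a dry run.
LDM=${LDM:-ldm}

# set_whole_cores DOMAIN CORES  (hypothetical helper)
# Re-provisions DOMAIN with CORES whole CPU cores (hard partitioning).
set_whole_cores() {
    dom=$1
    cores=$2
    # Stop and unbind the domain so its CPU resources can be changed.
    $LDM stop "$dom" || return 1
    $LDM unbind "$dom" || return 1
    # Allocate whole cores (every thread of each core) instead of vCPU threads.
    $LDM set-core "$cores" "$dom" || return 1
    # Bind the new resources and boot the domain again.
    $LDM bind "$dom" || return 1
    $LDM start "$dom"
}
```

A dry run such as `(LDM=echo; set_whole_cores guest-dom 2)` only prints the five commands; afterwards `ldm list -o resmgmt guest-dom` can be used to confirm the whole-core constraint.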

For best-practice migration, the architecture requires the following components:

- A shared filesystem for hosting virtual machines to allow for node migration.

- Cold migration involves stopping the LDOM, migrating it manually and starting it again.

- Warm migration migrates the LDOM automatically with minimal downtime.
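A cold migration as described above could look something like the sketch below. It assumes the guest domain's virtual disks are on shared storage reachable from the target host, that `ldm migrate-domain` is available on both hosts, and that root SSH access to the target works. `cold_migrate`, `LDM`, `RSH` and the host name `root@ovm-host2` are hypothetical.

```sh
#!/bin/sh
# Sketch only: assumes shared storage for the domain's disks and
# `ldm migrate-domain` on both hosts. LDM and RSH are hypothetical
# overrides; set LDM=echo and RSH=echo for a dry run.
LDM=${LDM:-ldm}
RSH=${RSH:-ssh}

# cold_migrate DOMAIN TARGET  (hypothetical helper)
cold_migrate() {
    dom=$1
    target=$2   # e.g. root@ovm-host2 (hypothetical host)
    # 1. Stop the domain; it remains bound, which cold migration is
    #    assumed to accept.
    $LDM stop "$dom" || return 1
    # 2. Move the domain to the target host (may prompt for the
    #    target's password).
    $LDM migrate-domain "$dom" "$target" || return 1
    # 3. Start the domain again on the target host.
    $RSH "$target" ldm start "$dom"
}
```

With `LDM=echo` and `RSH=echo` set in a subshell, the function only prints the commands. On releases that support warm (live) migration, the stop step would presumably be dropped and `ldm migrate-domain` run against the active domain instead.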

This shared file system (which we recommend placing on a SAN, on iSCSI with a cluster file system, or on GPFS) allows us to migrate:

- Solaris 10 (11/13) & 11 LDOMs

- Solaris 8, 9 & 10 zones

NB! Running "init 5" on a primary domain that has running guest domains reboots the primary domain, i.e. it acts like "init 6".
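Given that behaviour, one option is to stop the guest domains before powering off the primary domain. The sketch below assumes the default `ldm ls` column layout (NAME in column 1, STATE in column 2) and that a stopped (bound) guest no longer counts as running. `active_guests`, `stop_all_guests` and `LDM` are hypothetical names.

```sh
#!/bin/sh
# Sketch only: assumes the default `ldm ls` layout (NAME STATE ...).
# LDM is a hypothetical override; point it at a stub for a dry run.
LDM=${LDM:-ldm}

# Read `ldm ls` output on stdin; print active domains other than primary.
active_guests() {
    awk 'NR > 1 && $1 != "primary" && $2 == "active" { print $1 }'
}

# Stop each active guest domain in turn; give up on the first failure.
stop_all_guests() {
    for dom in $($LDM ls | active_guests); do
        $LDM stop "$dom" || return 1
    done
}
```

Run as root on the primary domain, for example `stop_all_guests && init 5`.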

OVM administration

- List all nodes running on a hypervisor OVM host:

    # ldm ls
    NAME       STATE   FLAGS   CONS  VCPU  MEMORY  UTIL  NORM  UPTIME
    primary    active  -n-cv-  UART  2     16G     1.5%  1.5%  84d 34m
    guest-dom  active  -n----  5001  16    28G     0.4%  0.4%  84d 29m

- Start/stop a guest domain:

    ldm start <guest-domain>
    ldm stop <guest-domain>
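The `ldm ls` output above can also be filtered in a script, for example to summarise the guest domains. This is a minimal sketch, assuming the default column order (NAME STATE FLAGS CONS VCPU MEMORY ...) with every column filled in, as in the sample; inactive domains may leave columns blank, in which case the parseable `ldm list -p` output is a safer source. `guest_summary` and `LDM` are hypothetical.

```sh
#!/bin/sh
# Sketch only: assumes every `ldm ls` column is populated.
# LDM is a hypothetical override; point it at a stub for testing.
LDM=${LDM:-ldm}

# Print name, state, vCPU count and memory for each non-primary domain.
guest_summary() {
    $LDM ls | awk 'NR > 1 && $1 != "primary" {
        printf "%s state=%s vcpu=%s memory=%s\n", $1, $2, $5, $6
    }'
}
```

Against the sample output above, this would print `guest-dom state=active vcpu=16 memory=28G`.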

Useful commands for instances on a hypervisor OVM host:

    ldm                            <-- display help for ldm
    ldm list-services              <-- display the virtual disk, network and console services
    ldm list-constraints           <-- display CPU cores, max-cores etc.
    ldm list-variable <domain>     <-- display boot parameters for the domain
    ldm list -o resmgmt <domain>   <-- check whether the domain uses whole cores (hard partitioning)
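Building on `ldm list -o resmgmt`, a quick audit of which domains are hard-partitioned might look like this. It assumes that the resmgmt output of a whole-core domain contains the string `whole-core`, so verify that against your own output first. `check_whole_core` and `LDM` are hypothetical.

```sh
#!/bin/sh
# Sketch only: assumes `ldm list -o resmgmt <domain>` mentions
# "whole-core" for whole-core domains. LDM is a hypothetical override.
LDM=${LDM:-ldm}

# For every domain in `ldm ls`, report whether it is whole-core.
check_whole_core() {
    for dom in $($LDM ls | awk 'NR > 1 { print $1 }'); do
        if $LDM list -o resmgmt "$dom" | grep -q 'whole-core'; then
            echo "$dom: whole-core"
        else
            echo "$dom: not whole-core"
        fi
    done
}
```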

Useful commands for instances on an OS OVM host:

    zoneadm list -icv                        <-- view all zones configured on the host
    zoneadm -z <zonename> boot/reboot/halt   <-- boot, reboot or halt a zone
    zonecfg -z <zonename> info               <-- display the zone's configuration
    zonecfg -z <zonename> export             <-- export the zone configuration (add -f <file> to write it to a file)
    zlogin <zonename>                        <-- log in to a zone
    zlogin -C <zonename>                     <-- log in to the zone's console
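For scripting against zones, `zoneadm list` also has a parsable `-p` format whose first fields are `zoneid:zonename:state:zonepath`. The sketch below relies only on the name and state fields. `zone_states`, `running_zones` and `ZONEADM` are hypothetical names.

```sh
#!/bin/sh
# Sketch only: parses `zoneadm list -cp` (colon-separated fields,
# zonename in field 2, state in field 3). ZONEADM is a hypothetical
# override; point it at a stub for testing.
ZONEADM=${ZONEADM:-zoneadm}

# Print "zonename state" for every configured non-global zone.
zone_states() {
    $ZONEADM list -cp | awk -F: '$2 != "global" { print $2, $3 }'
}

# Print only the names of running zones.
running_zones() {
    zone_states | awk '$2 == "running" { print $1 }'
}
```

For example, `for z in $(running_zones); do zlogin "$z" uptime; done` runs `uptime` in every running zone.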